Data Mining (Nursery Data Set)

1. Selecting an appropriate data set

Description of the data set

The Nursery data set was taken from the UCI Machine Learning Repository. The Nursery database was derived from a hierarchical decision model originally developed to rank applications for nursery schools. It was used for several years in the 1980s, when there was excessive enrollment in these schools in Ljubljana, Slovenia, and rejected applications frequently needed an objective explanation. The final decision depended on three subproblems: the occupation of the parents and the child’s nursery; the family structure and financial standing; and the social and health picture of the family. The Nursery data set is multivariate, and all of its attribute values are categorical except for one attribute. It consists of 8 attributes and 12960 instances. The table below lists the attribute information (UCI Machine Learning Repository: Nursery Data Set, 2021).

Table 01 : Attribute information of the dataset

| Attribute | Description | Values |
|---|---|---|
| Parents | Parents’ occupation | usual, pretentious, great_pret |
| Has_nurs | Child’s nursery | proper, less_proper, improper, critical, very_crit |
| Form | Form of the family | complete, completed, incomplete, foster |
| Children | Number of children | 1, 2, 3, more |
| Housing | Housing condition | convenient, less_conv, critical |
| Finance | Financial standing of the family | convenient, inconv |
| Social | Social condition | non_prob, slightly_prob, problematic |
| Health | Health condition | recommended, priority, not_recom |

The table below shows the class distribution (number of instances per class). The class describes the evaluation of an application for nursery school.

Table 02 : Class distribution

| Class | Number of instances (N) | N [%] |
|---|---|---|
| not_recom | 4320 | 33.333 |
| recommend | 2 | 0.015 |
| very_recom | 328 | 2.531 |
| priority | 4266 | 32.917 |
| spec_prior | 4044 | 31.204 |

Reasons for selecting the Nursery dataset

· The dataset contains 12960 instances, which is a reasonably large number, so it can support more reliable rules

· Association rule mining was originally designed to work with categorical data, and the Nursery dataset’s attributes are categorical, so the dataset is easy to use for this task

· There are no missing values in this dataset
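In Weka’s ARFF format a missing value is written as `?`. The claim that the dataset has no missing values can be checked with a small Python sketch that counts empty or `?` fields per column in comma-separated data rows. The column order, the sample rows and the file name `nursery.data` are assumptions about a local copy of the UCI file, not part of the original workflow.

```python
import csv

# Attribute order assumed to match Table 01, followed by the class attribute
COLUMNS = ["parents", "has_nurs", "form", "children",
           "housing", "finance", "social", "health", "class"]


def count_missing(lines, marker="?"):
    """Count fields per column that are empty or equal to the missing-value marker."""
    counts = {name: 0 for name in COLUMNS}
    for row in csv.reader(lines):
        if not row:  # ignore blank lines such as a trailing newline
            continue
        for name, value in zip(COLUMNS, row):
            if value.strip() in ("", marker):
                counts[name] += 1
    return counts


if __name__ == "__main__":
    # Illustrative rows only; the second one has a missing health value
    rows = [
        "usual,proper,complete,1,convenient,convenient,non_prob,recommended,recommend",
        "usual,proper,complete,1,convenient,convenient,non_prob,?,not_recom",
        "",
    ]
    print(count_missing(rows))
    # For a local copy of the data (hypothetical file name):
    # with open("nursery.data", newline="") as f:
    #     print(count_missing(f))
```

On a complete copy of the data, every count in the returned dictionary should be 0.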

2. Preparing and preprocessing the data

Missing or garbage values in a dataset can cause problems when performing data mining tasks. The Nursery dataset has no missing or garbage values, so no cleaning is needed before running the algorithms. However, Weka’s Apriori implementation works only with nominal (categorical) attributes, and the Nursery dataset contains one string attribute (children), so it must be converted to nominal. The procedure for this task is shown below.

Figure 01 : Before converting the children attribute to nominal (current data type: string)

Ø Filter used: weka.filters.unsupervised.attribute.StringToNominal

Ø Command used: StringToNominal -R 4

Ø Object Editor settings:

· Change the “attributeRange” value to “4” as shown in Figure 02 and click OK. Then click “Apply” in the Filter panel

Figure 02 : Object Editor StringToNominal

Figure 03 : After converting the children attribute to nominal (new data type: Nominal)

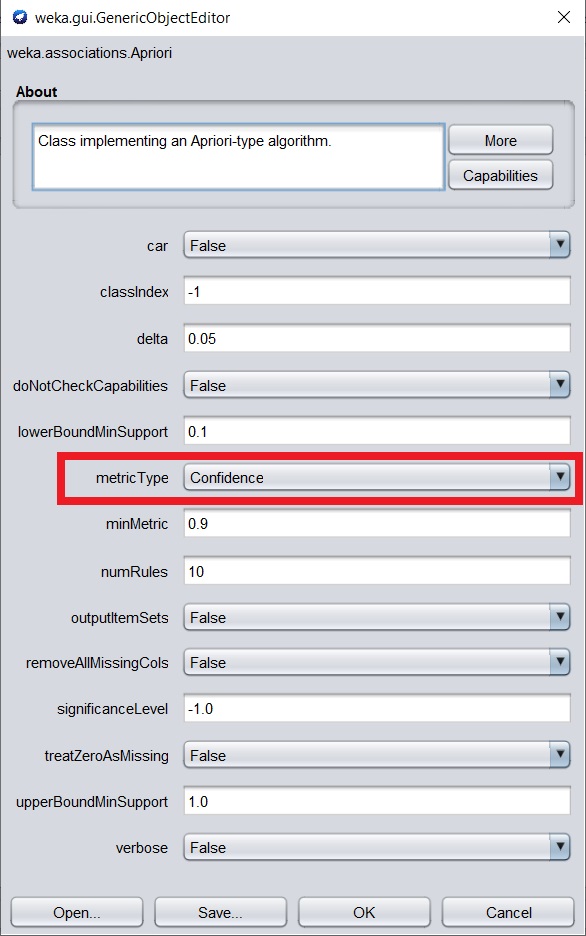
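Conceptually, StringToNominal replaces a free-text attribute with a nominal one whose allowed values are the distinct strings found in the data. The Python sketch below illustrates that idea for the children column by building an ARFF-style nominal declaration and integer codes. The helper name is hypothetical, and ordering the values by first appearance is an assumption of this sketch; Weka’s filter may order them differently.

```python
def to_nominal(name, values):
    """Build an ARFF nominal declaration and integer codes for a string column.

    Distinct values are kept in order of first appearance (an assumption of
    this sketch, not necessarily what Weka's filter does).
    """
    levels = list(dict.fromkeys(values))  # unique values, first-seen order
    index = {level: i for i, level in enumerate(levels)}
    declaration = "@attribute {} {{{}}}".format(name, ",".join(levels))
    codes = [index[v] for v in values]  # each string replaced by its level index
    return declaration, codes


if __name__ == "__main__":
    children = ["1", "2", "3", "more", "1", "more"]
    decl, codes = to_nominal("children", children)
    print(decl)   # @attribute children {1,2,3,more}
    print(codes)  # [0, 1, 2, 3, 0, 3]
```

After the conversion, every instance refers to one of a fixed set of labels, which is what Apriori needs to treat "children=more" as an item.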

Algorithm used for discovering association rules

Ø Algorithm used for association rule mining: Apriori algorithm

Apriori algorithm with the metric type set to Confidence

§ Command used: Apriori -N 10 -T 0 -C 0.9 -D 0.05 -U 1.0 -M 0.1 -S -1.0 -c -1

§ Object Editor settings: set the parameters as shown in Figure 04

Figure 04 : Metric type set to “Confidence” in the Object Editor

3. Finding rules and determining the interesting rules

Summary (Apriori algorithm, metric type: confidence)

§ Minimum support: 0.1 (1296 instances)

§ Minimum metric <confidence>: 0.9

§ Number of cycles performed: 18

§ Generated sets of large itemsets:

§ Size of set of large itemsets L(1): 30

§ Size of set of large itemsets L(2): 137

§ Size of set of large itemsets L(3): 12

Number of best rules found: 10 (up to 24 rules could be generated, but the -N 10 option limits the output to the 10 best rules)
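The summary figures can be related to the command-line parameters. Assuming Weka’s Apriori starts one delta below the upper bound (-U 1.0), lowers the minimum support by -D 0.05 each cycle, and stops at the lower bound (-M 0.1) or once -N rules have been found, reaching 0.1 takes 18 cycles, and 0.1 × 12960 gives the 1296-instance threshold. This model is an assumption that happens to be consistent with the summary above; the sketch only reproduces the schedule and does not mine any rules.

```python
def support_schedule(upper=1.0, delta=0.05, lower=0.1):
    """Minimum-support values tried, one per cycle (simplified model of -U/-D/-M)."""
    steps = round((upper - lower) / delta)  # (1.0 - 0.1) / 0.05 = 18
    # round() removes floating-point drift such as 0.09999999999999998
    return [round(upper - k * delta, 10) for k in range(1, steps + 1)]


def min_support_count(support, n_instances):
    """Convert a relative support threshold into a number of instances."""
    return int(support * n_instances + 0.5)


if __name__ == "__main__":
    schedule = support_schedule()
    print(len(schedule), schedule[0], schedule[-1])  # 18 0.95 0.1
    print(min_support_count(schedule[-1], 12960))   # 1296
```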

* In the rules below, “class” represents the evaluation of the application for nursery school

1. class=not_recom 4320 ==> health=not_recom 4320 <conf:(1)> lift:(3) lev:(0.22) [2880] conv:(2880)

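§ This means that if the evaluation is not recommended, then health is not recommended.

§ The confidence of this rule is 1, which means the rule holds for 100% of the instances that satisfy its left-hand side: all 4320 instances with class=not_recom also have health=not_recom

§ The lift value is 3, which means the right-hand side is three times more likely when the left-hand side holds than it is in the dataset as a whole (health=not_recom occurs in 4320 of 12960 instances, i.e. one third). A lift well above 1 indicates a strong positive association between the left-hand side and the right-hand side

§ The leverage is 0.22 (2880 instances, shown in brackets) and the conviction is 2880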
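The metrics in each rule line can be recomputed from instance counts. In this sketch, confidence, lift and leverage use their standard definitions. For conviction, the printed values (2880, 1440, 960) are reproduced only if 1 is added to the denominator, which appears to be how Weka keeps conviction finite when the confidence is exactly 1; that smoothing is an inference from the output, so treat it as an assumption. The function and argument names are illustrative.

```python
def rule_metrics(lhs_count, rhs_count, both_count, n):
    """Interestingness measures for a rule LHS ==> RHS.

    lhs_count:  instances matching the left-hand side
    rhs_count:  instances matching the right-hand side
    both_count: instances matching both sides
    n:          total number of instances
    """
    confidence = both_count / lhs_count
    lift = confidence / (rhs_count / n)
    leverage = both_count / n - (lhs_count / n) * (rhs_count / n)
    # Assumed Weka-style conviction: the +1 avoids division by zero when confidence is 1
    conviction = (lhs_count * (n - rhs_count) / n) / (lhs_count - both_count + 1)
    return {
        "conf": confidence,
        "lift": lift,
        "lev": leverage,
        "lev_instances": round(leverage * n),  # the bracketed number in Weka's output
        "conv": conviction,
    }


if __name__ == "__main__":
    # Rule 1: class=not_recom (4320) ==> health=not_recom (4320), 12960 instances
    print(rule_metrics(4320, 4320, 4320, 12960))
    # Rule 3: finance=convenient class=not_recom (2160) ==> health=not_recom
    print(rule_metrics(2160, 4320, 2160, 12960))
```

For rule 1 this gives a leverage of 2/9 ≈ 0.22 (2880 instances) and a conviction of 2880; for rule 3 it gives 1/9 ≈ 0.11 (1440 instances) and 1440, matching the values listed for the rules.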

2. health=not_recom 4320 ==> class=not_recom 4320 <conf:(1)> lift:(3) lev:(0.22) [2880] conv:(2880)

§ If health is not recommended, then the evaluation is not recommended

3. finance=convenient class=not_recom 2160 ==> health=not_recom 2160 <conf:(1)> lift:(3) lev:(0.11) [1440] conv:(1440)

§ If finance is convenient and the evaluation is not recommended, then health is not recommended

4. finance=convenient health=not_recom 2160 ==> class=not_recom 2160 <conf:(1)> lift:(3) lev:(0.11) [1440] conv:(1440)

§ If finance is convenient and health is not recommended, then the evaluation is not recommended

§ Here the confidence, lift, leverage and conviction values are 1, 3, 0.11 and 1440 respectively

5. finance=inconv class=not_recom 2160 ==> health=not_recom 2160 <conf:(1)> lift:(3) lev:(0.11) [1440] conv:(1440)

§ If finance is inconvenient and the evaluation is not recommended, then health is not recommended

6. finance=inconv health=not_recom 2160 ==> class=not_recom 2160 <conf:(1)> lift:(3) lev:(0.11) [1440] conv:(1440)

§ If finance is inconvenient and health is not recommended, then the evaluation is not recommended

7. parents=usual class=not_recom 1440 ==> health=not_recom 1440 <conf:(1)> lift:(3) lev:(0.07) [960] conv:(960)

§ If the parents’ occupation is usual and the evaluation is not recommended, then health is not recommended

8. parents=usual health=not_recom 1440 ==> class=not_recom 1440 <conf:(1)> lift:(3) lev:(0.07) [960] conv:(960)

§ If the parents’ occupation is usual and health is not recommended, then the evaluation is not recommended

9. parents=pretentious class=not_recom 1440 ==> health=not_recom 1440 <conf:(1)> lift:(3) lev:(0.07) [960] conv:(960)

§ If the parents’ occupation is pretentious and the evaluation is not recommended, then health is not recommended

10. parents=pretentious health=not_recom 1440 ==> class=not_recom 1440 <conf:(1)> lift:(3) lev:(0.07) [960] conv:(960)

§ If the parents’ occupation is pretentious and health is not recommended, then the evaluation is not recommended

Interesting rules that are useful to the client

1. health=not_recom 4320 ==> class=not_recom 4320 <conf:(1)> lift:(3) lev:(0.22) [2880] conv:(2880)

Reason : The confidence of this rule is 100%: every one of the 4320 instances with health=not_recom has the evaluation not_recom. In this dataset, a not recommended health condition therefore always leads to a not recommended nursery evaluation. The lift value of 3 means that a not recommended evaluation is three times more likely when health is not recommended than it is overall, so the health condition has a strong influence on the evaluation.

2. finance=convenient health=not_recom 2160 ==> class=not_recom 2160 <conf:(1)> lift:(3) lev:(0.11) [1440] conv:(1440)

Reason : This rule also has a confidence of 100%. Whenever health is not recommended, the nursery evaluation is not recommended, even if the family’s finance is convenient. The lift value of 3 shows that this combination of health condition and financial standing is strongly associated with a not recommended evaluation.

3. finance=inconv health=not_recom 2160 ==> class=not_recom 2160 <conf:(1)> lift:(3) lev:(0.11) [1440] conv:(1440)

Reason : This rule also has a confidence of 100%. When health is not recommended and finance is inconvenient, the nursery evaluation is always not recommended. The lift value of 3 shows that this combination of health condition and financial standing is strongly associated with a not recommended evaluation.

4. parents=usual health=not_recom 1440 ==> class=not_recom 1440 <conf:(1)> lift:(3) lev:(0.07) [960] conv:(960)

Reason : This rule also has a confidence of 100%. Whenever health is not recommended, the nursery evaluation is not recommended, even if the parents’ occupation is usual. The lift value of 3 shows that this combination of health condition and parents’ occupation is strongly associated with a not recommended evaluation.

5. parents=pretentious health=not_recom 1440 ==> class=not_recom 1440 <conf:(1)> lift:(3) lev:(0.07) [960] conv:(960)

Reason : This rule also has a confidence of 100%. When health is not recommended and the parents’ occupation is pretentious, the nursery evaluation is always not recommended. The lift value of 3 shows that this combination of health condition and parents’ occupation is strongly associated with a not recommended evaluation.
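The five interesting rules above are exactly the rules from the top-10 list whose right-hand side is the class attribute, i.e. the rules that predict the nursery evaluation. A minimal Python sketch of that selection step, with the rules typed in by hand from the Weka output (the data structure and function name are illustrative):

```python
# Each rule: (left-hand side items, right-hand side items, confidence), copied from the top-10 output
RULES = [
    ({"class": "not_recom"}, {"health": "not_recom"}, 1.0),
    ({"health": "not_recom"}, {"class": "not_recom"}, 1.0),
    ({"finance": "convenient", "class": "not_recom"}, {"health": "not_recom"}, 1.0),
    ({"finance": "convenient", "health": "not_recom"}, {"class": "not_recom"}, 1.0),
    ({"finance": "inconv", "class": "not_recom"}, {"health": "not_recom"}, 1.0),
    ({"finance": "inconv", "health": "not_recom"}, {"class": "not_recom"}, 1.0),
    ({"parents": "usual", "class": "not_recom"}, {"health": "not_recom"}, 1.0),
    ({"parents": "usual", "health": "not_recom"}, {"class": "not_recom"}, 1.0),
    ({"parents": "pretentious", "class": "not_recom"}, {"health": "not_recom"}, 1.0),
    ({"parents": "pretentious", "health": "not_recom"}, {"class": "not_recom"}, 1.0),
]


def rules_predicting(rules, target="class", min_conf=0.9):
    """Keep rules whose consequent contains the target attribute and whose confidence is high enough."""
    return [(lhs, rhs, conf) for lhs, rhs, conf in rules
            if target in rhs and conf >= min_conf]


if __name__ == "__main__":
    for lhs, rhs, conf in rules_predicting(RULES):
        lhs_text = " ".join(f"{k}={v}" for k, v in lhs.items())
        rhs_text = " ".join(f"{k}={v}" for k, v in rhs.items())
        print(f"{lhs_text} ==> {rhs_text} conf:({conf:g})")
```

The printed rules are the same five rules discussed above, in the same order.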

Recommendations

Based on the important rules listed above, the following recommendations can be given to students who hope to enroll in nursery school.

· All of the important rules above indicate that a good health condition is necessary in order to enter nursery school

· It’s better if the parents’ occupation is usual rather than pretentious or great_pret

· It’s better if the financial standing of the family is convenient

· The child’s nursery, form of the family, number of children, housing condition and social condition do not appear in any of the generated rules. Students who want to enroll in nursery school should therefore mainly consider the first three points above

References

§ Tutorialspoint.com. 2021. Weka - Association - Tutorialspoint. [online] Available at: <https://www.tutorialspoint.com/weka/weka_association.htm> [Accessed 4 May 2021]

§ Archive.ics.uci.edu. 2021. UCI Machine Learning Repository: Nursery Data Set. [online] Available at: <https://archive.ics.uci.edu/ml/datasets/nursery> [Accessed 4 May 2021]

§ Researchgate.net. 2021. Discovering Rules for Nursery Students using Apriori Algorithm. [online] Available at: <https://www.researchgate.net/publication/336551606_Discovering_Rules_for_Nursery_Students_using_Apriori_Algorithm> [Accessed 5 May 2021]

§ Weka.sourceforge.io. 2021. Apriori (weka-dev 3.9.5 API). [online] Available at: <https://weka.sourceforge.io/doc.dev/weka/associations/Apriori.html> [Accessed 8 May 2021]
